Have you ever heard someone talking about leaked videos, leaked MMS, or someone being harassed over edited or leaked private photos? These are things we often hear about and ignore, without realizing their significance. This is where the term Non-Consensual Sharing of Intimate Images (NCSII) comes in.

NCSII represents a deep violation of an individual’s privacy and personal autonomy. It involves the distribution of sexual or nude media, including photographs and videos, without the consent of the person depicted.

The digital age has fueled the rapid growth of new and more dangerous forms of NCSII, most notably the rise of deepfakes and morphed images. Deepfake pornography uses Artificial Intelligence (AI) to generate sexually explicit content featuring real individuals. These synthetic images and videos are becoming increasingly realistic and difficult to distinguish from original content. Morphed images, on the other hand, involve the alteration or editing of existing photographs to depict a person as nude or engaged in sexual acts. Both have become alarmingly widespread, with a staggering 96-99% of deepfakes and morphed images being pornographic and overwhelmingly targeting women. Tools for creating these kinds of deceptive images are becoming increasingly accessible: various apps and Telegram bots are commonly available worldwide, lowering the barrier for abusers.

This abuse is not limited to personal relationships; it can also be employed as a tool for control, blackmail, humiliation, intimidation, and harassment. The motivations behind these acts are diverse, extending beyond mere revenge to include hate, defaming a community, asserting superiority over an individual or community, financial gain, the pursuit of notoriety (striving to become well known for something negative), and even sexually exploiting someone through threats.

1. The Nature of NCSII: Global and Regional Statistics:

The prevalence of Non-Consensual Sharing of Intimate Images (NCSII) is a significant global concern, with studies revealing varying rates. Among young adults, global estimates of NCSII victimization range from 1% to as high as 32%; in general, victimization rates are estimated to be between 1% and 6%. (These figures are not precise because research methodologies are inconsistent.)

A comprehensive study conducted across ten countries provided a more standardized measure, finding that over one in five (22.6%) adults reported experiencing Image-Based Sexual Abuse (IBSA). Within the US, a significant portion of the adult population has been affected, with reports indicating that 1 in 12 adults identify as victims. Another study in the US found that 8% of adults have had their nude or sexual images shared without their consent. In terms of perpetration, offending rates among young adults range from 3% for behaviors like forwarding or posting images to 24% for any kind of non-consensual sharing. A study focused on the US found that 1 in 20 individuals reported engaging in Non-Consensual Disclosure of Intimate Images (NCDII). Furthermore, a cross-national study involving the UK, Australia, and New Zealand revealed that 17.5% of respondents had engaged in at least one form of IBSA perpetration.

Table 1: Global Prevalence of IBSA Victimization by Country (Percentage of Respondents)

| Country | Women (95% CI) | Men (95% CI) | Total (95% CI) |
|---|---|---|---|
| Australia | 22.5% (19.8-25.4) | 26.6% (23.2-30.2) | 24.5% (22.3-26.7) |
| Belgium | 13.4% (11.2-15.7) | 18.4% (15.6-21.4) | 15.9% (14.2-17.8) |
| Denmark | 16.2% (13.8-18.8) | 18.7% (16.1-21.5) | 17.6% (15.8-19.5) |
| France | 15.1% (12.7-17.6) | 18.2% (15.6-21.1) | 16.7% (14.9-18.6) |
| Mexico | 29.4% (26.5-32.5) | 29.9% (26.7-33.1) | 29.8% (27.7-32.0) |
| Netherlands | 13.5% (11.4-15.9) | 15.9% (13.4-18.6) | 15.0% (13.4-16.8) |
| Poland | 12.5% (10.4-14.7) | 14.6% (12.2-17.3) | 13.6% (12.0-15.3) |
| South Korea | 24.0% (21.0-27.2) | 13.6% (11.5-16.1) | 18.8% (16.9-20.8) |
| Spain | 18.7% (16.3-21.4) | 18.6% (16.0-21.5) | 18.7% (16.9-20.6) |
| USA | 23.8% (21.3-26.5) | 24.8% (21.5-28.3) | 24.2% (22.2-26.3) |

2. Revenge P*rn or Non-Consensual Intimate Image Abuse:

Although the term “Revenge P*rn” has been commonly used, it is increasingly recognized as a misnomer, and “Non-Consensual Intimate Image Abuse” is the more accurate description, because the motivations behind this abuse are diverse and not driven by revenge alone. Perpetrators may be acting out of spite, a desire for profit, or even for entertainment. Furthermore, the term “Revenge P*rn” can be misleading because it incorrectly implies that the victim has somehow provoked or deserved the abuse.

NCSII is on the rise in low- and middle-income countries as they become increasingly digitized, suggesting that increased access to technology without adequate digital safety measures can exacerbate the problem, especially in societies with limited awareness of digital consent. In Nigeria, a survey indicated that nearly 30% of respondents had experienced image-based sexual abuse, and 7 out of 10 girls in focus group discussions knew someone who had been affected. In the UK, 1 in 14 adults have experienced threats to have their intimate images shared, underscoring the prevalence of both threatened and actual dissemination. The UK’s Revenge Porn Helpline witnessed a drastic tenfold increase in cases between 2019 and 2023, reaching nearly 19,000 cases in 2023. Within India, Odisha and Kolkata recorded the highest number of reported cases of morphed photos of women in 2022, indicating a regional hotspot for this specific form of abuse.

3. Deepfakes and Morphed Images: A Growing Threat:

The advent and increasing sophistication of artificial intelligence have led to a dramatic rise in the creation and distribution of deepfake p*rnography, posing a significant and evolving threat. According to MyImageMyChoice, as of January 2023 a staggering 270,000+ deepfakes had been identified online, accumulating over 4 billion views and marking an astonishing 3000% increase since 2019. In 2023 alone, over 95,000 deepfake videos were circulated online. There was also a tenfold increase in deepfake fraud worldwide between 2022 and 2023. One study states that 98% of deepfakes fall into the p*rnographic category, which is consistent with a 2019 study that put the figure at 96%. The accessibility of the tools used to create them is a big concern: platforms like Telegram host at least 50 bots that are used for deepfakes, with over 4 million monthly users.

4. Motivations Behind the Abuse: Unpacking the Perpetrators’ Intent:

The term “revenge porn” suggests that revenge is the primary driver behind NCSII, but the reality is quite different: revenge plays a role, but it is not the only motive. Other drivers include the urge for profit, the desire for notoriety, distorted entertainment (i.e. exploitation), defamation, playing with someone’s sentiments, and targeting communities. NCSII can also be deployed as a means to exert power and control over the victim, to inflict distress and humiliation, or even to influence ongoing legal proceedings.

Financial gain has emerged as a significant motivator, particularly with the rise of sextortion. Sextortion involves threatening to share nude or explicit images unless the victim provides more such images or, increasingly, monetary compensation. Financial sextortion is on a concerning upward trajectory, with analyses indicating that as many as 79% of predators involved in sextortion are primarily seeking money rather than further sexual imagery.

In some cases, NCSII is driven by hate, specifically targeting women of particular communities. Instances have been documented where morphed photos and online “auctions” of Muslim women in India have been used as a form of targeted harassment and communal intimidation. This type of abuse aims to silence these women and send a broader message of intimidation to their entire community. More broadly, marginalized groups, including religious minorities, are disproportionately affected by online violence. Women of color are also significantly more likely to be targeted with online abuse compared to white women.

5. Methods of Dissemination: Where the Images Spread

Disclaimer: This section mentions platforms and types of websites solely for awareness and educational purposes. We do not promote or endorse any platform involved in the spread of NCSII. The goal is to highlight how this abuse spreads and the need for stronger safeguards.

NCSII content spreads rapidly across multiple digital platforms:

Social Media Platforms such as Facebook, Instagram, X (formerly Twitter), and Reddit are often used due to their vast user networks. Victims may be tagged directly, causing public exposure. While these platforms have policies and AI tools to detect and remove NCSII, gaps still exist.

P*rnographic Websites remain a major issue, with some sites reportedly removing thousands of non-consensual images. However, thousands of others continue to host and profit from such content. The sheer scale of the problem is further underscored by the existence of over 9,500 websites dedicated to promoting and profiting from the distribution of NCSII.

Encrypted Messaging Apps like Telegram and Discord have become hotspots for deepfake sharing, often via bots and private groups. Their anonymity makes detection and enforcement difficult.

6. The Devastating Impact on Victims: Psychological, Social, and Economic Consequences:

The experience of having intimate images shared without consent inflicts profound and far-reaching harm on victims, manifesting in severe psychological, social, and economic consequences. I remember a case in which images belonging to one of my sister’s friends were stolen from her Google Drive by a one-sided admirer she knew nothing about. After multiple requests, legal action, and a monetary transfer, we were able to regain access to the photos and videos, and legal action was later taken against him.

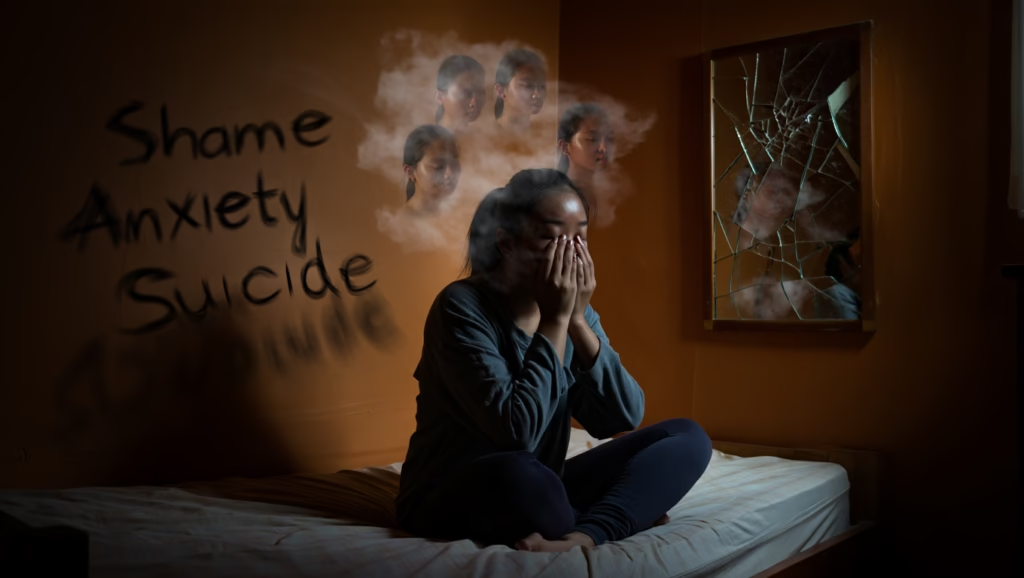

Many victims grapple with overwhelming anxiety and flashbacks, and may even contemplate or attempt suicide. Beyond this, victims often face social isolation, profound shame, and significant damage to their lives. The fear of judgment from friends, family members, and even employers can be a constant source of anxiety. In some cases, survivors have died by suicide after discovering deepfake videos of themselves circulating online.

Table 2: Impact of NCSII on Victims (Percentage Reporting)

| Impact | Percentage |
|---|---|
| Significant Emotional Distress | 93% |
| Significant Impairment in Social, Occupational, or Other Important Areas of Functioning | 82% |
| Sought Psychological Services | 42% |
| Relationships with Family Jeopardized | 34% |
| Relationships with Friends Jeopardized | 38% |
| Lost a Significant Other/Partner | 13% |
| Teased by Others | 37% |
| Harassed or Stalked Online by Users Who Saw Their Material | 49% |
| Harassed or Stalked Outside of the Internet by Users Who Saw Their Material Online | 30% |
| Fear of Losing a Current or Future Partner | 40% |
| Fear Their Current and/or Future Children Discovering the Material | 54% |
| Had to Close Down an Email Address and Create a New One | 25% |
| Had to Create a New Identity (or Identities) Online | 26% |
| Had to Close Their Facebook Account | 26% |
| Had Difficulty Focusing on Work or at School | 54% |
| Had to Take Time Off from Work or Take Fewer Credits/a Semester Off from School | 26% |
| Quit Their Job or Dropped Out of School | 8% |
| Had Difficulty Getting a Job or Getting Into School | 13% |
| Fear Their Professional Reputation Could Be Tarnished in the Future | 55% |
| Often or Occasionally Fear How This Will Affect Their Professional Advancement | 57% |
| Feel They Have Something to Hide from Potential Employers | 52% |
| Say This Has Affected Their Professional Advancement in Terms of Networking | 39% |
| Have Had Suicidal Thoughts | 51% |

7. Community and Demographic Variances in Victimization:

The prevalence and impact of non-consensual sharing of intimate images vary significantly across different communities and demographic groups, revealing patterns of vulnerability and targeted abuse. Gender plays a complex role, with women disproportionately affected by sexual abuse in many respects. Age is another critical factor influencing victimization: younger adults, particularly those aged 18-34, consistently report higher rates of harassment. There is also clear evidence of targeted harassment against specific communities through the use of morphed images. Muslim women in India face disproportionately high rates of online trolling and of the creation and dissemination of morphed photos. Amnesty International found that Muslim women in India were subjected to 94.1% more online trolling than women from other ethnicities and religions. “Bulli Bai”, “Sulli Deals”, and various Instagram pages in India have specifically targeted Muslim women journalists and activists, highlighting the use of NCSII as a tool for religious and gender-based hate.

8. Financial Sextortion: A Closer Look at Monetary Exploitation:

Financial sextortion has emerged as a significant and rapidly growing form of online exploitation. Cases of sextortion, the act of threatening to share nude or explicit images, have increased sharply in recent years. By the end of 2022, the FBI reported a tenfold increase in sextortion reports. An analysis of reports submitted to the National Center for Missing and Exploited Children (NCMEC) estimates that it receives approximately 812 sextortion reports each week, with 556 of these likely being financially motivated. The primary motivation behind financial sextortion is monetary gain, but perpetrators may also threaten physical harm to the victim or their loved ones if their demands are not met.

What to Do If You’re Targeted:

1. Stay calm. Don’t panic, and do not pay. Paying rarely stops the threats.

2. Preserve evidence, and try to buy time so you can take action. Reach out to people near you; don’t be ashamed, because this is not your fault.

3. Report it. Contact NCMEC, cyber crime portals, or local authorities.

9. Legal and Platform Responses: Addressing the Challenge, How can you get your morphed images removed:

Various efforts can be made to combat the malicious use of nude morphed and deepfake photos of women. These involve a combination of legal action and measures taken by online platforms. Many platforms have explicit policies prohibiting revenge p*rn and NCSII. Meta, the parent company of Facebook and Instagram, uses AI to detect and block the sharing of intimate images and has dedicated specialized teams to combat sextortion. YouTube reviews reported content around the clock. While these platforms often rely on user reporting to identify violating content, and some voluntarily remove NCII content upon notification, the effectiveness and speed of enforcement vary.

Initiatives like StopNCII.org and Take It Down are playing a crucial role in combating the spread of NCSII. These platforms use technology to create a unique encrypted digital fingerprint of an intimate image. The fingerprints are then shared with participating companies such as Meta, TikTok, and OnlyFans, which use them to remove matching content before it is widely disseminated. Take It Down, an initiative by the National Center for Missing and Exploited Children (NCMEC), focuses on preventing the spread of young people’s intimate images, including those generated by AI. These proactive tools have demonstrated significant success in removing non-consensual intimate images from the internet, with StopNCII.org reporting a removal rate of over 90%. Interestingly, in some cases, using copyright law to claim ownership of an image has proven more effective in achieving content removal than reporting it as non-consensual nudity, suggesting that alternative legal avenues may need to be explored. In India, the Delhi High Court has required intermediaries to remove all NCII of a victim, not just the links provided by the victim, illustrating efforts towards greater accountability.
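To make the fingerprint idea concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how StopNCII.org or Take It Down is actually implemented: the names `fingerprint` and `PlatformBlocklist` are hypothetical, and SHA-256 is used as a simple stand-in that only matches exact copies of a file. Real services may rely on other kinds of hashes that can also match resized or re-encoded copies. The point it shows is that only the fingerprint needs to be shared, never the image itself.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a hex digest that stands in for the image's digital fingerprint.

    Assumption: SHA-256 is a stand-in for illustration; it matches exact copies only.
    """
    return hashlib.sha256(image_bytes).hexdigest()


class PlatformBlocklist:
    """Hypothetical platform-side store that holds fingerprints, not images."""

    def __init__(self) -> None:
        self._hashes: set[str] = set()

    def add_hash(self, digest: str) -> None:
        # The platform receives only the fingerprint string.
        self._hashes.add(digest)

    def matches(self, upload_bytes: bytes) -> bool:
        # An upload is flagged if its fingerprint is already on the blocklist.
        return fingerprint(upload_bytes) in self._hashes


if __name__ == "__main__":
    # Placeholder bytes; a real tool would read the image file on the user's device.
    private_image = b"placeholder bytes standing in for a private image"

    digest = fingerprint(private_image)  # computed locally by the affected person

    blocklist = PlatformBlocklist()
    blocklist.add_hash(digest)           # only the digest is submitted

    print(blocklist.matches(private_image))       # True: identical upload is caught
    print(blocklist.matches(b"unrelated upload"))  # False: other content is untouched
```

Because a cryptographic hash changes completely when even one byte of the file changes, this sketch would miss an edited or recompressed copy, which is why it should be read only as a picture of the workflow.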

1- What to do if your photo has been leaked

2- How to remove photos if someone stole them

3- How to complain if someone is harassing you with your edited images

4- How to delete nude photos from social media platforms

5- Where to complain about AI-generated images

6- How to complain if someone is asking for money over your morphed images

7- My private images are on social media; how do I delete them?

8- Someone edited my photo, put it on Google, and is asking me for money

Be safe, be cautious, and don’t blame victims. Everyone has a personal life, and what they do or how they do it is their own business; the ones at fault are those who misuse it. Still, try to take safety measures yourself as well.

🙌 Let’s Stand Together Against NCSII

The fight against Non-Consensual Sharing of Intimate Images begins with awareness and empathy. Today it is someone else; tomorrow it could be you or your loved one.

- 📢 Share this post with friends, family, and social circles to raise awareness.

- 💛 Support victims with kindness, not judgment.

- 🚨 Report any NCSII content you come across. Don’t scroll past it; report it. You might save someone’s life.

- 🛡️ Advocate for stronger digital safety laws and demand accountability from platforms.

Every share counts. Every voice matters. Let’s make the internet safer for everyone and help our people stay healthy and alive.
